Data centers have become a fundamental part of society and a vital component of most businesses. Today they are connected in increasingly agile and dynamic ways, and data center interconnect (DCI) technology is now the driving force behind most innovation and investment in the optical networking market. But it hasn’t always been that way.

The DCI journey began about 20 years ago and our team at ADVA were involved from the very start. In the mid-90s, one of our first clients was a financial institution looking to connect two data centers for business continuity and disaster recovery (BC/DR). This early involvement is why we’re sometimes called the original DCI company.

Of course, things were a lot more static at that time, and we certainly weren’t talking about hyperscale and hundreds of gigabits per second. This was about services like ESCON and Fibre Channel, running at hundreds of megabits per second between the two sites. Low latency was key for synchronous mirroring, so our first steps involved reducing latency as much as possible – something that still plays a crucial role today.
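To give a feel for why distance matters so much for synchronous mirroring, here is a rough back-of-the-envelope sketch of fiber propagation delay. It is an illustration rather than a description of any product: the function names are hypothetical, the group index of 1.468 is an assumed typical value for standard single-mode fiber, and the calculation ignores equipment, protocol and storage latency entirely.

```python
# Illustrative only: propagation delay over a fiber link.
# Ignores transponder, switch, protocol and storage-array latency.

SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # speed of light in vacuum
GROUP_INDEX = 1.468  # assumed typical value for standard single-mode fiber


def one_way_delay_us(distance_km: float, group_index: float = GROUP_INDEX) -> float:
    """Return the one-way propagation delay in microseconds."""
    return distance_km * group_index / SPEED_OF_LIGHT_KM_PER_S * 1e6


def round_trip_delay_us(distance_km: float, group_index: float = GROUP_INDEX) -> float:
    """Return the round-trip propagation delay in microseconds.

    A synchronous write is only acknowledged after the remote site
    confirms it, so each write pays at least one full round trip.
    """
    return 2 * one_way_delay_us(distance_km, group_index)


if __name__ == "__main__":
    for km in (10, 50, 100):
        print(f"{km:>4} km: round trip of about {round_trip_delay_us(km):.0f} microseconds")
```

Under these assumptions, every kilometer of fiber adds a little under 5 microseconds each way, so a 100 km link costs close to a millisecond per round trip before any equipment is involved. That is one reason synchronous mirroring has traditionally been limited to sites that are relatively close together.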

Naturally for BC/DR, high availability was another fundamental requirement in those early days, and it has remained one ever since. Then, around five years ago, security and encryption joined the list of DCI priorities.

Recently the focus has shifted to hyperscale. This isn’t necessarily limited to building huge data centers but is more concerned with the architecture and approach used. For hyperscale, hardware and software architecture must be able to scale from a small implementation to a gigantic facility without interruption or a change to the fundamental technology.

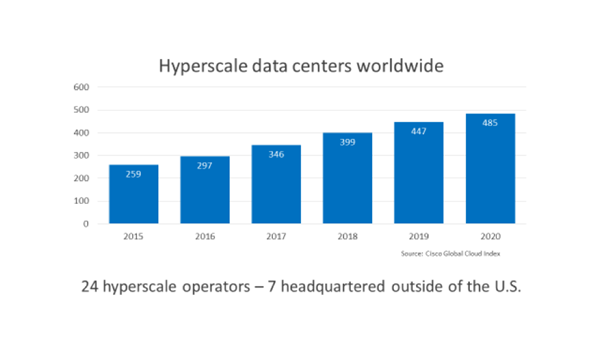

So how many of these facilities currently exist? According to a Global Cloud Index study there are around 350 hyperscale data centers operated by 24 companies, mostly headquartered in the US.

By 2020, it’s predicted there will be 500 hyperscale facilities housing half of the world’s servers and processing about 70% of global data. Furthermore, 60% of data will be stored in these data centers, and they will carry around half of all data center traffic. In other words, these facilities are expected to account for roughly 50% of global DCI traffic.

So while BC/DR applications are still important and growing, the hyperscale side is far more significant. The main drivers for this include the mass adoption of cloud-based services now that barriers such as security, availability, regulation and operational issues have largely been removed. Netflix, for example, have moved away from using their own data centers and now host all of their content in the cloud with Amazon. Even financial institutions such as Deutsche Bank are beginning to move mission-critical data to the cloud.

Video becoming an everyday part of life has had its influence too. People expect to see multimedia content online and – stopping short of the animated daily newspapers seen in Harry Potter – video content that launches immediately is now a staple component of online news.

Smartphone vendors are another contributor; with each new handset comes higher resolution images and, as is now the norm, live pictures with a short video behind the image. This fuels young people’s desire to create personal content and consume others’, generating the need for more storage and more connectivity, and ultimately more data centers following the hyperscale approach.

Then there’s the internet of things and big data, which are really two sides of the same coin. The critical challenge here is using data while it’s still in motion and extracting valuable information from it. This needs to be compiled and analyzed, sometimes within milliseconds, putting a whole new set of requirements on the data center landscape. That’s why many functions will ultimately need to be pushed out to the network edge.

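Artificial intelligence and machine learning will add further pressure. To accommodate this, some hyperscale operators are now deploying new classes of specialized processor. Google, for example, has developed its own chip for machine learning called the Tensor Processing Unit (TPU), while Microsoft has been deploying FPGA-based accelerators in its data centers.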

Achieving the necessary increases in performance becomes even harder if we believe those who say that Moore’s Law is slowing down and that the regular doubling of transistor counts, and with it processing power, roughly every two years will no longer be possible. This will mean more enterprises will struggle with short upgrade cycles and more high-end processors will sit in the cloud, driving the data center business even more.

So, is the future going to see ever more of these enormous hyperscale facilities? I don’t believe it’s that simple. Proximity will become more significant. Given the need for latency-critical, high-capacity services, together with increasingly stringent security regulations, it’s far more likely that the next big trend will be towards edge data centers. And I’ll be explaining exactly where we are in this process next time.