Telco operators see many benefits to network functions virtualization (NFV). In short, the goal is to bring the advantages of the cloud to telco operators. One of the big benefits is the wide array of available support software, including Linux, Kernel-based Virtual Machine (KVM), Open vSwitch and OpenStack. These software packages provide a base of well-known functionality upon which operators can build innovative new virtualized services.

However, the telco network is not the same as the data center, so cloud-centric solutions may not transfer directly. In particular, operators are concerned about the scalability and resiliency of OpenStack. How do we enable virtualized services to be deployed at scale when using OpenStack?

What’s Wrong With OpenStack?

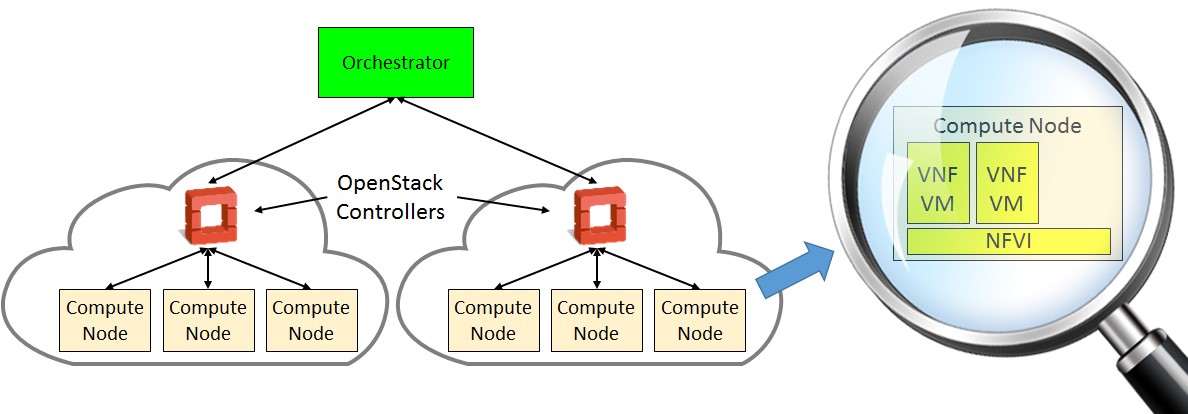

In the case of a centralized model, the answer is plenty, according to BT’s Peter Willis. In a centralized model, each central OpenStack controller (in red in the image above) is connected to a number of remote compute nodes.

In October 2015, Willis presented a famous enumeration of six issues at the SDN & OpenFlow World Congress in Dusseldorf. Those issues are:

- Maintaining port numbers. Earlier versions of OpenStack did not have a predictable assignment of virtual ports to NICs. This created problems in control and maintenance of service chains. This issue can be overcome by Ensemble Orchestrator or by using Kilo or a later version of OpenStack and its associated version of KVM.

- Service chain modification. Earlier versions of OpenStack had limitations that necessitated the deletion and recreation of a service chain in order to insert or remove a VNF. This issue is addressed in Kilo and later versions of OpenStack and KVM.

- Lack of scalability. Willis estimates that an OpenStack controller is capable of managing about 500 compute nodes. For a large network, that means a lot of servers dedicated to running OpenStack.

- Startup storms. The input/output load of a compute node on OpenStack is much higher at startup than at steady state. This exacerbates the scaling problem previously described.

- Security. Willis reported that supporting a connection from an OpenStack controller to a remote compute node required 500 holes to be opened in a firewall. That situation is neither secure nor maintainable.

- Forwards/backwards compatibility. The interface between OpenStack and the Neutron agent on the compute nodes is not guaranteed to be compatible across versions. This is not an issue in a data center where virtual machines (VMs) can be moved off control and compute nodes, which can then be upgraded. For a distributed model, upgrading either end could cause a loss of communication and it's not feasible to upgrade both at the same time.
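To put the scalability and security figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the two numbers Willis reported (about 500 compute nodes per controller, and 500 firewall holes per remote compute node). The network size of 100,000 nodes is a hypothetical value chosen for illustration, and multiplying the firewall holes by the node count assumes each remote node sits behind its own firewall.

```python
import math

# Figures reported by Willis, as quoted in the list above.
NODES_PER_CONTROLLER = 500     # compute nodes one controller can manage
FIREWALL_HOLES_PER_NODE = 500  # firewall openings per remote compute node


def centralized_footprint(compute_nodes: int) -> dict:
    """Estimate controller count and firewall openings for a centralized model."""
    if compute_nodes < 0:
        raise ValueError("compute_nodes must be non-negative")
    # Round up: a partially filled controller is still a whole controller.
    controllers = math.ceil(compute_nodes / NODES_PER_CONTROLLER)
    # Assumption: every remote node is behind its own firewall.
    firewall_holes = compute_nodes * FIREWALL_HOLES_PER_NODE
    return {"controllers": controllers, "firewall_holes": firewall_holes}


# Hypothetical network size, chosen only for illustration.
estimate = centralized_footprint(100_000)
print(estimate)  # {'controllers': 200, 'firewall_holes': 50000000}
```

Under these assumptions, a network of 100,000 remote nodes would need about 200 controllers and tens of millions of firewall openings, which is the kind of overhead the list above describes.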

Cloud in a Box

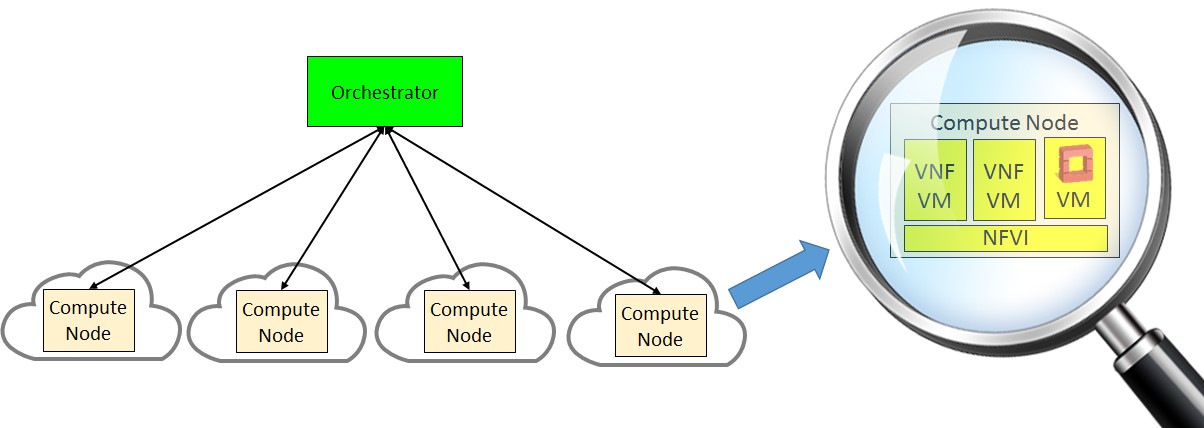

Willis was not the first or only person to identify the issues with OpenStack. In mid-2015, we worked with Masergy to build the first commercial deployment of NFV in the access network for the vCPE application. During this process, Tony Pardini of Masergy wanted to move from a centralized model to a distributed model. In a distributed model, each compute node has its own instance of the OpenStack controller, creating a “cloud in a box” as shown in the image above.

Tony had two main reasons for moving to a distributed model:

Goal 1: Protect management and control networks. He didn’t want to transport and expose the OpenStack management and control networks across the access network. These interfaces are fragile and insecure, as described above.

Goal 2: Consistent API. Tony wanted the virtualization API to be consistent at the customer site and the data center. This is really important because it allows developers to write applications that work with both the distributed and centralized models of NFV (i.e. location independence).
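The idea of location independence can be illustrated with a small Python sketch. It is not taken from the Masergy deployment: the endpoint URLs, the VNF name and the image and flavor identifiers are all hypothetical, and the request body is only loosely modeled on a simplified OpenStack Compute "create server" call. The point is that the application code is identical for both sites, and only the base URL changes.

```python
import json


def build_create_server_request(base_url: str, name: str, image: str, flavor: str) -> dict:
    """Describe an HTTP request that would boot a VNF instance.

    Only base_url depends on where the OpenStack instance lives.
    """
    return {
        "method": "POST",
        "url": base_url.rstrip("/") + "/servers",
        # Simplified body; a real request may need more fields (for example, networks).
        "body": {"server": {"name": name, "imageRef": image, "flavorRef": flavor}},
    }


# Hypothetical endpoints: one in the data center, one at a customer site.
DATA_CENTER = "https://dc.example.net/compute/v2.1"
CUSTOMER_SITE = "https://cpe-0042.example.net/compute/v2.1"

for endpoint in (DATA_CENTER, CUSTOMER_SITE):
    request = build_create_server_request(endpoint, "vfirewall", "image-id", "flavor-id")
    print(request["url"], json.dumps(request["body"]))
```

Because the request body is the same in both cases, a developer can test against the data center and deploy to the customer site without changing the application.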

There are some potential difficulties to solve for the cloud-in-a-box model:

Issue 1: Size. OpenStack has a significant footprint for memory and storage. This is not an issue in a centralized deployment where OpenStack is hosted in a data center, but it can become an issue when you move out to the access part of the network. In the short term, we added memory to support this model for Masergy. Over time, we will create a slimmed-down build of OpenStack and perhaps run it in a container.

Issue 2: Integration. OpenStack is typically installed on bare metal. Doing so complicates integration with the NFV infrastructure (NFVI). We solved this by running the OpenStack controller in a VM. In the future, we expect to move the OpenStack controller into a container.

Issue 3: Increase in OpenStack Nodes. While the cloud-in-a-box approach solves one set of issues, it creates another: a significant increase in the number of OpenStack instances. Fortunately, we have a solution for this new issue: DevOps methods and a horizontally scalable orchestrator such as Ensemble Orchestrator.

Having solved those problems, let’s look at how the cloud-in-a-box model addresses some of the issues of OpenStack.

- Lack of scalability. Now each instance of OpenStack is controlling only one compute node. Problem solved.

- Startup storms. Ditto.

- Security. The orchestrator needs only a single port to communicate with each instance of OpenStack, so the security is greatly enhanced.

- Forwards/backwards compatibility. The instance of OpenStack and the Neutron agent can be upgraded simultaneously. Furthermore, each node can be upgraded individually, simplifying the process of managing the upgrade.
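The per-node upgrade described in the last item can be sketched as follows. This is a toy model in Python, not an actual upgrade tool: the `BoxNode` class, the site names and the helper functions are hypothetical, and the release names are used only as version labels. It shows that when the controller and the Neutron agent live on the same node and are upgraded together, every node stays internally consistent at each step of a rolling upgrade.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BoxNode:
    """One cloud-in-a-box node: controller and Neutron agent live together."""
    name: str
    controller_version: str
    agent_version: str

    def is_consistent(self) -> bool:
        """True when the controller and agent run the same release."""
        return self.controller_version == self.agent_version


def upgrade_node(node: BoxNode, target: str) -> None:
    """Upgrade both halves of a single node in one step."""
    node.controller_version = target
    node.agent_version = target


def rolling_upgrade(nodes: List[BoxNode], target: str) -> List[str]:
    """Upgrade nodes one at a time; return the names upgraded, in order."""
    upgraded = []
    for node in nodes:
        upgrade_node(node, target)
        upgraded.append(node.name)
        # At every step, each node matches itself, whatever its neighbors run.
        assert all(n.is_consistent() for n in nodes)
    return upgraded


# Hypothetical customer sites, all starting on the same release.
fleet = [BoxNode(f"site-{i}", "kilo", "kilo") for i in range(3)]
print(rolling_upgrade(fleet, "liberty"))  # ['site-0', 'site-1', 'site-2']
```

Midway through the loop, the fleet runs a mix of releases, yet no controller ever talks to an agent of a different version, which is what makes the staged upgrade manageable.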

In addition to the benefits of solving the listed problems, there’s another big advantage to this approach. By providing a completely integrated solution including OpenStack, operators don’t have to understand the internals of OpenStack and can focus on the northbound interfaces only.

Result: A Scalable and Supportable Model for OpenStack

By moving the OpenStack controller onto the compute node, we are able to solve many of the issues noted by service providers. As a result, they can now deploy virtualized services at scale, located in the access network and in the cloud, all while continuing to use the familiar tool of OpenStack.