Deploying Network Functions Virtualization (NFV) at the edge of the network offers benefits both for the deployment itself and for the services it supports. By pushing virtualization to a customer or cell site, an operator can support end-user network services that depend on localized functions such as dual-homed routing, WAN optimization, site security and WiFi control. These services require network functions to be deployed at the service edge. However, another type of service may also benefit from this deployment model: compute resources at the service edge for on-site managed hosting, or “micro-clouds,” consumed directly by the end user.

First: Why Push Virtualization to the Service Edge of the Network?

There are two solid reasons for deploying NFV at the service edge: operational and cost drivers, and service requirements. The operational and cost drivers include the following:

- First Cost. Putting virtualization resources at the service edge minimizes the initial investment, because there is no need to build out centralized infrastructure before deploying virtualized services. Instead, resources are deployed as each customer is added.

- Minimal Change to Deployment Model. When virtualized services are deployed at the service edge, there is little or no need to change the core infrastructure. The operator simply replaces one or more appliances at the edge with a server running software.

The other set of drivers is end-user services that may require NFV to be deployed at the service edge, including:

- Dual-homed routing. This can include single-network failover methods such as HSRP (Hot Standby Router Protocol), as well as dual-network scenarios that connect to two different service providers. In either case, the routing decision must be made locally at the service edge, which calls for a virtual router to be deployed there.

- Site security. A security-conscious customer will want all traffic encrypted before it leaves the building. The service must include security functions deployed onsite.

- WAN optimization. When a low-speed link connects the site to the network, WAN optimization must be deployed at that choke point. Otherwise, the benefit of reducing WAN traffic load won’t be realized.

- WiFi control. A managed WiFi control service should remain operational even if a network issue disables the uplink. Otherwise, basic network operation at the customer site would be shut down.

- Diagnostics and analysis. To troubleshoot a problem with traffic classification or prioritization, it is necessary to examine packets at the point where these operations occur. This means locating analysis and troubleshooting tools such as packet capture, DPI (deep packet inspection) and/or traffic generation at the service edge.
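To make the dual-homed routing point concrete, the sketch below shows the kind of local decision a virtual router at the service edge has to make: pick the preferred uplink that is still healthy. It is a simplified, hypothetical illustration, not an implementation of HSRP or any vendor's failover logic; the `Uplink` type, the priority values and the provider names are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Uplink:
    name: str       # hypothetical label, e.g. one uplink per service provider
    priority: int   # assumed convention: higher value is preferred
    healthy: bool   # result of a local liveness check at the service edge


def select_uplink(uplinks: Sequence[Uplink]) -> Optional[Uplink]:
    """Return the highest-priority healthy uplink, or None if every uplink is down.

    The decision uses only locally observed health, which is why it has to run
    on a router at the site rather than in a remote data center.
    """
    candidates = [u for u in uplinks if u.healthy]
    if not candidates:
        return None
    return max(candidates, key=lambda u: u.priority)


# Illustrative scenario: the preferred provider's link has failed.
links = [
    Uplink("provider-a", priority=110, healthy=False),
    Uplink("provider-b", priority=100, healthy=True),
]
print(select_uplink(links).name)  # provider-b
```

Because the health check and the selection both run on site, failover still works when the path to a centralized controller is the very link that went down.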

The listed services are all provided and managed by the communications service provider (CSP) today. But placing virtualization resources at the service edge also enables another, more innovative use case.

Micro-Clouds: Managed Hosting, on Site

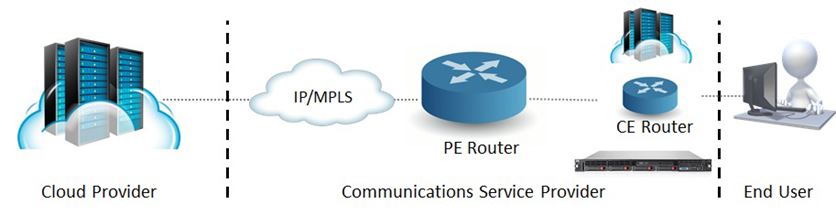

The value of Infrastructure as a Service (IaaS) as a cloud delivery platform has been widely discussed, as have the complexities that arise when such a service is located in a data center. A typical cloud IaaS application is shown in the following diagram:

These complexities create an opportunity for the CSP. What if the CSP provided a micro-cloud IaaS service located directly at the service edge? In the following example, a compute node hosts both a virtual router and a micro-cloud.

There are limitations on the types of applications the end user can load, since a compute node at the service edge has far fewer resources than a data center. However, service chaining and distributed applications can help.

Service chaining ensures that only the necessary functions are hosted at the service edge. For example, security functions might be hosted at the service edge and chained to VPN functionality hosted in a metro data center. This meets the customer’s requirements while minimizing the resources needed at the service edge.
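A minimal way to picture such a chain is as an ordered list of hops, each tagged with the site that hosts it. The sketch below is hypothetical: the `ChainHop` type, the "edge"/"metro" site labels and the vCPU figures are illustrative assumptions, not measured requirements or part of any NFV orchestration API. It shows that traffic still traverses every function in order, while only the edge-hosted hops count against the edge compute node.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class ChainHop:
    function: str  # virtual network function name (illustrative)
    site: str      # hypothetical site label: "edge" or "metro"
    vcpus: int     # assumed resource demand, not a measured figure


def traversal(chain: Sequence[ChainHop]) -> List[str]:
    """Order in which customer traffic visits each function, with its hosting site."""
    return [f"{hop.function}@{hop.site}" for hop in chain]


def edge_footprint(chain: Sequence[ChainHop]) -> int:
    """vCPUs the chain consumes on the compute node at the service edge."""
    return sum(hop.vcpus for hop in chain if hop.site == "edge")


# The security-plus-VPN example from the text, with made-up resource numbers.
chain = [
    ChainHop("security", "edge", 2),
    ChainHop("vpn", "metro", 4),
]
print(traversal(chain))       # ['security@edge', 'vpn@metro']
print(edge_footprint(chain))  # 2
```

Moving a hop from "edge" to "metro" changes only where it runs, not the order traffic follows, which is what lets the edge footprint shrink without changing the service the customer sees.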

Distributed applications perform a similar division of labor for the end user. Consider the case of network authentication for hotels. Today, there are two parts to the implementation:

- An application hosted in a central cloud that performs a lookup or billing function based on the credentials of the hotel guest.

- An appliance that allows or blocks access based on input from the cloud application.

With a micro-cloud IaaS service available at the service edge, the appliance could be replaced by a software application that the hotel loads onto the micro-cloud. The authentication application would remain in a centralized cloud, and the hotel operator would no longer need to maintain an appliance at each property.
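The division of labor can be sketched as two pieces: a lookup that stays in the central cloud, and a small enforcement component running in the hotel's micro-cloud. Everything below is hypothetical: `EdgeAccessController`, the room-and-surname credential scheme and the in-memory `GUESTS` table are assumptions for illustration, and the central lookup is modeled as a plain function rather than a real network call.

```python
from typing import Callable, Dict, Set


class EdgeAccessController:
    """Hypothetical on-site component that replaces the access appliance.

    It makes no authorization decisions itself; it asks the centralized
    application and enforces the answer locally.
    """

    def __init__(self, authorize: Callable[[str, str], bool]) -> None:
        self._authorize = authorize   # stand-in for a call to the central cloud app
        self._allowed: Set[str] = set()  # device MAC addresses granted access

    def login(self, mac: str, room: str, surname: str) -> bool:
        """Forward guest credentials to the central app; admit the device if approved."""
        if self._authorize(room, surname):
            self._allowed.add(mac)
            return True
        return False

    def permits(self, mac: str) -> bool:
        """Local enforcement check applied to the device's traffic."""
        return mac in self._allowed


# Hypothetical guest records held by the centralized lookup/billing application.
GUESTS: Dict[str, str] = {"1204": "garcia"}


def cloud_authorize(room: str, surname: str) -> bool:
    """Stand-in for the centralized lookup; a real deployment would call a remote service."""
    return GUESTS.get(room) == surname.lower()


ctl = EdgeAccessController(cloud_authorize)
print(ctl.login("aa:bb:cc:dd:ee:01", "1204", "Garcia"))  # True
print(ctl.permits("aa:bb:cc:dd:ee:01"))  # True
print(ctl.permits("aa:bb:cc:dd:ee:02"))  # False
```

Passing the lookup in as a callable keeps the edge component independent of how the central application is reached, so the same on-site software could be loaded at every property.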

Flexible Models for Deployment Are Key to Service Innovation

You may choose to push virtualization to the edge of the network to enable cost-effective and dynamic service delivery. It is even more advantageous to use NFV technology to create net-new services that deliver innovation directly to end users and that operators can use as building blocks for new services.

Flexibility is the key. The full benefit of NFV can only be realized if all deployment models are available, including deployment at the service edge.