Multi-access edge computing (MEC) is expected to play a strategic role as networks transform, bringing new levels of performance and access to mobile, wireless, and wired networks in support of future services and applications. A key goal of MEC is to reduce network congestion and improve application performance by moving functions, content and processing closer to the subscriber. This lets network operators make better use of network resources while improving the delivery of content and applications, giving end users a better quality of experience through lower latency and higher bandwidth.

Where is the edge?

Distributing compute and storage will likely add costs for network operators. The location and sizing of the edge must therefore be chosen based on the profiles of the applications and services it is expected to support. As a result, there could be multiple edges depending on the type of traffic. An edge could sit at a base station, a small cell, a Wi-Fi hot spot or even a router or switch.

An incorrectly placed edge could create unnecessary duplication of resources, resulting in network inefficiency. Additionally, the performance benefits must be sufficient to justify the effort, and in the case of caching content, storage capacity and latency must also be considered. The key is to place the edge so that compute, storage and network resources are available when and where needed.
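To make the placement trade-off concrete, here is a minimal sketch in Python. It assumes a simple rule that is not taken from any operator's actual method: among candidate sites that meet an application's latency budget, pick the cheapest one. The site names, latency figures and cost units are hypothetical values chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class CandidateSite:
    name: str
    round_trip_ms: float  # assumed round-trip latency from subscriber to site
    cost_units: float     # assumed relative cost of placing resources here


def choose_edge(sites: List[CandidateSite],
                latency_budget_ms: float) -> Optional[CandidateSite]:
    """Return the cheapest site that meets the latency budget, or None."""
    eligible = [s for s in sites if s.round_trip_ms <= latency_budget_ms]
    if not eligible:
        return None  # no candidate is close enough for this application
    return min(eligible, key=lambda s: s.cost_units)


# Hypothetical candidates: closer to the subscriber is faster but costlier.
SITES = [
    CandidateSite("base_station", round_trip_ms=2.0, cost_units=10.0),
    CandidateSite("aggregation_router", round_trip_ms=8.0, cost_units=4.0),
    CandidateSite("regional_data_center", round_trip_ms=25.0, cost_units=1.0),
]

if __name__ == "__main__":
    for budget in (5.0, 10.0, 50.0):
        site = choose_edge(SITES, budget)
        print(budget, site.name if site else None)
```

Under these assumed numbers, a tight latency budget forces the edge out to the base station, while a relaxed budget lets it stay in a cheaper, more centralized site. This is one way to see why different traffic types can end up with different edges.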

In short, there is no easy or single answer to the “Where is the edge?” question.

Virtualization key to MEC

MEC will further leverage and scale an operator’s network functions virtualization (NFV) architecture. Virtualization in combination with network slicing will make it possible for operators to dynamically move the location of the edge and manage traffic flows, while service orchestration will optimize the process to provide seamless delivery of the application and a consistent user experience. For instance, it might be useful to move the edge closer to users when traffic density is particularly high, such as during a special event (a concert or sporting event, for example), then move the edge back to a more centralized location once the event is over and traffic demand drops. However, decisions to move the edge will depend on operator preferences as well as other considerations such as latency, security or even regulatory requirements.
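The event scenario above can be sketched as a small decision rule. This is an illustrative simplification, not an orchestration API: the function names, the two location labels and the user-count thresholds are all hypothetical, and the sketch ignores the latency, security and regulatory considerations an operator would also weigh. Two separate thresholds are used so the edge does not flip back and forth when demand hovers around a single value.

```python
from typing import Iterable, List


def next_edge_location(current: str, active_users: int,
                       move_out_threshold: int = 5000,
                       move_back_threshold: int = 2000) -> str:
    """Decide where the edge should run, given current demand.

    "local" means close to the users (for example, near the venue);
    "central" means a more centralized site. Thresholds are assumptions.
    """
    if current == "central" and active_users >= move_out_threshold:
        return "local"      # demand spike: move the edge toward users
    if current == "local" and active_users <= move_back_threshold:
        return "central"    # demand has dropped: consolidate again
    return current          # between thresholds: stay where we are


def simulate(samples: Iterable[int], start: str = "central") -> List[str]:
    """Apply the rule to a sequence of demand samples."""
    location = start
    history = []
    for users in samples:
        location = next_edge_location(location, users)
        history.append(location)
    return history


if __name__ == "__main__":
    # Hypothetical demand before, during and after an event.
    print(simulate([1000, 6000, 3000, 1500]))
```

With the assumed thresholds, the edge moves out when demand reaches 6000 users, stays local at 3000 and returns to the central site only once demand falls to 1500.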

MEC use cases and applications

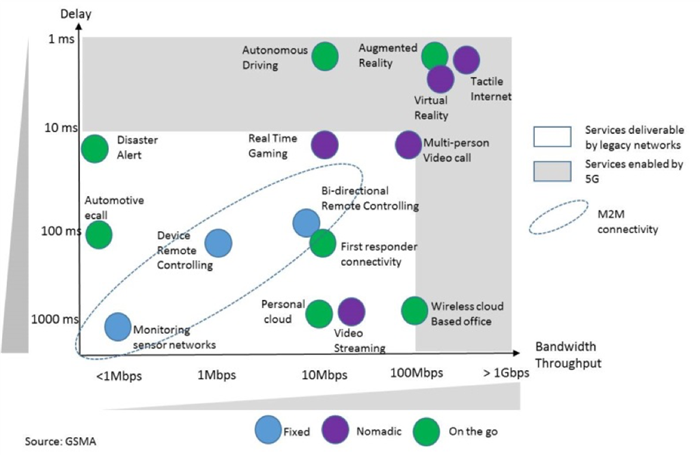

5G networks will enable applications that require both low latency and high bandwidth, with MEC playing a starring role in making this happen. As shown, 5G will move beyond audio and data towards visual, tactile and cognitive experiences, creating an environment that allows for anything-as-a-service.

5G applications

AT&T has recently stated that edge computing will be key to enabling a range of new applications within the 5G network. For example, MEC use cases such as augmented reality and virtual reality require rapid rendering. They will benefit from processing located at the edge rather than at the main service, which provides fast response times and low latency.

For autonomous cars, MEC will be able to push vehicle-to-everything applications, data and services from the central cloud to the edge – which is most likely a roadside unit. This is critical to enabling real-time communications for traffic, accident prevention and safety.

Other applications and use cases, each with its own service requirements, that will benefit from a high-bandwidth, low-latency, highly available environment include:

- Internet-of-things and its associated verticals

- Drone control

- Enterprise services

- Homeland security

- Content delivery

- Data caching

- Context-aware services

- Location-aware services

- Asset tracking

- Video surveillance

- Smart grid

- Critical communications

MEC is a key component for realizing the value of low latency and enabling a wealth of new services and applications. Although the approach to MEC implementation will likely vary from operator to operator, all will need a distributed and dynamic edge that leverages and expands their investment in virtualization. That edge will be key to optimizing the use of network resources while improving the delivery of content and applications, giving end users a better quality of experience.