1970s: “That’s not real AI – that’s just heuristics. Real AI is an expert system.”

1990s: “That’s not real AI – that’s just an expert system. Real AI is machine learning.”

2010s: “That’s not real AI – that’s just machine learning. Real AI is neural networks.”

2025: “That’s not real AI – that’s just neural networks. Real AI is LLMs with RAG and MCP tools.”

I’m sure we’ve all heard or even said this kind of thing. It’s not easy to separate the goal of artificial intelligence from the latest implementation. We get swept up by the hopeful promise of each new technology wave, chasing the hype instead of the outcome. And because we struggle to crisply define human intelligence, we easily lose sight of the goal of artificial intelligence. It’s easier to say: “I’ll know it when I see it.”

That’s why the latest advancements in generative AI have so many industries excited. Tools like ChatGPT make AI approachable to the general public in a way that no previous AI technology has. We know it because we see it. Yet even though we think we’re seeing AI in action, the fact remains that 95% of projects using generative AI have yet to produce a return.

Don’t get me wrong – generative AI, and especially agentic AI, has me very excited about the future. But at the rate things have been moving lately, I’m sure next month there will be some new technology standing on the shoulders of these giants, reaching even greater heights.

How can you keep pace with the relentless evolution of AI and still create value?

Real artificial intelligence doesn’t specify a technology; it describes humankind’s aspiration for innovation. We want to take human roles, human tasks, human processes and have systems that perform them. You could say that any innovation throughout history has had this goal. Real AI must embody the hallmarks of human intelligence, regardless of the underlying technologies.

Consider the range of human processes, whether driving a car, assembling fire extinguishers in a factory, developing front-end GUI software or troubleshooting a subscriber’s internet service. These processes require human expertise and collaboration to be performed accurately and safely, and the intelligence behind them boils down to a few key characteristics. Proper action first requires sound, informed reasoning. Only experts who have learned their craft have the basis for reasoning through related tasks. Experts also explain the output of their work to other participants in the process and teach novices to perform their role in the future. REAL human intelligence bears these four interrelated characteristics: Reasoning, Explaining, Acting and Learning.

REAL artificial intelligence must bear these same hallmarks. REAL AI Reasons like a human, Explains its reasoning in human terms, Acts on that reasoning and Learns through this process to improve its reasoning.

In the past, AI technologies performed limited reasoning, explaining and acting with moderate success, but they’ve struggled mightily to scale learning. Expert systems represented human expertise remarkably well, but harvesting that expertise from human experts met with insurmountable challenges. Machine learning and neural networks found more success modeling physical systems, but they are difficult to scale because of the massive number of samples required to train them.

What if, alongside the reasoning, explaining and acting provided by AI, we could also have it natively learn from experts?

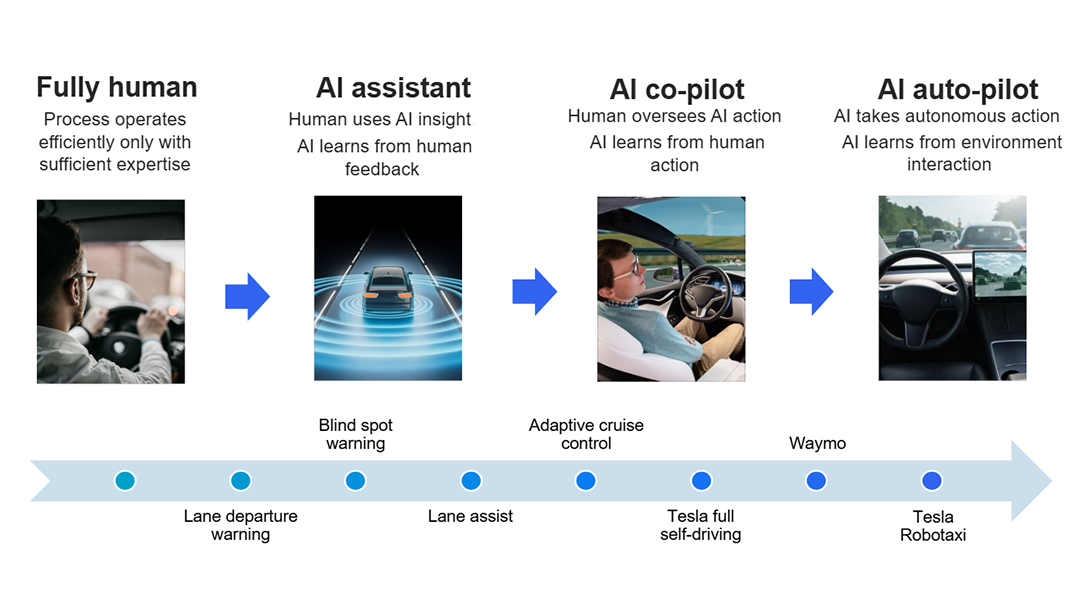

Industries such as automotive are figuring this out – and they are establishing strong patterns the rest of us can follow. I still remember the days when I drove completely unassisted (yep, dating myself a bit there). Then cars introduced blind-spot and lane-departure warnings, making me a better driver. These features assisted me, but I still drove completely on my own. Some carmakers used these new capabilities to collect data about traffic conditions and my behavior, which in turn enabled them to provide features like lane assist and adaptive cruise control. Rather than a mere assistant, the car became a copilot and continued learning how to take on more of my tasks.

And now we’re seeing the fruit of this progressive learning – cars are driving on their own. Automated agents weren’t introduced into the driving process on day one. Instead, artificial intelligence was first employed as an assistant to the expert, then as a copilot to the expert, and finally as the expert driver. This gradual evolution was about developing trust and meeting drivers where they were comfortable. Each phase allowed humans to gain confidence while the artificial intelligence learned to reason, explain and act better, ultimately taking on a human’s role in a human’s process.