Machine learning is ideally suited to systems with complex interdependencies. Learning patterns from virtually unlimited data lakes and using such insights for self-optimization sits at the core of machine-learning-based analytics solutions. Over-the-top digital players have capitalized on these solutions to offer differentiated services based on an intricate web of user actions, preferences and so on.

While the success of digital-first companies serves as an excellent example, it’s by no means a blueprint for other industries. In fact, machine learning ecosystems, including the big data promise, were neither conceived nor designed for manufacturing, utilities, or the oil and gas sector, to name a few. While these sectors continue to adapt and integrate machine learning frameworks into their toolchains, the benefits are limited by the absence of a pinpoint objective, that is, a domain-driven use case (think customer segmentation, fraud detection, load prediction, etc.).

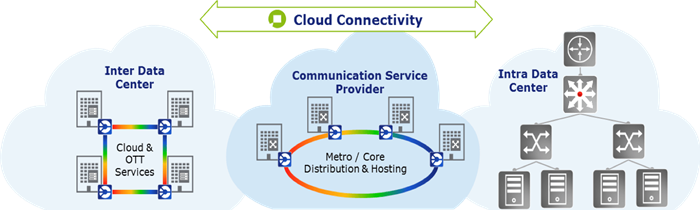

Cloud network prospects

Driven by extreme data rate requirements, the extent and variety of services, quality-of-service demands, etc., cloud network architectures are evolving at a rapid pace (see Figure 1). These networks are expensive to manage and operate, highly distributed in nature, and subject to diverse traffic loads. That’s why operators are so desperate for opportunities to optimize cost and performance. However, given the highly saturated and competitive market landscape, as well as the pressures emanating from both competitors and shareholders, making business sense of a (r)evolutionary solution is a tough nut to crack. So what is the financial justification for cloud network optimization beyond traditional means?

The answer is simple: Cloud networks nearly always operate based on pre-planned and static procedures, including design, planning, installation, and configuration cycles. While there are many pieces to this puzzle, this static mode of operation leads to the following key operational challenges:

- A reactive approach to problems, leading to over-engineered solutions, downtimes, etc.

- Limited scalability, be it in planning or service operations

- Under-utilization of precious network resources

- Manual and largely offline analysis at most levels of design and engineering

- High R&D-related expenses to manage and operate these networks

The aforementioned challenges, when seen in context, make a hand-in-glove use case for machine learning applications. Imagine this complex mesh of networks continually adjusting itself, learning from multiple interconnected user layer experiences, and coming up with autonomously optimized operating conditions. One of the fundamental aspects of cloud networking is the variability of user behaviour and traffic patterns, translating into dynamic capacity demands, among other things. A typical problem statement is to figure out the optimum planning, setup and service requirements that will be deployed on a given network. How can we leverage machine learning to address this?

Figure 1. Cloud connectivity landscape

Real-world network planning use case

Let me share a specific real-world example involving a physical layer transport capacity optimization problem. The typical procurement procedure followed by cloud owners is to issue requests for information (RFIs) to various system vendors, asking for feedback on their capabilities given certain boundary conditions driven by the owner’s specific use case. This is just the first level of engagement. However, as we’ll see, despite coming early in the game, it plays a make-or-break role.

In these situations, the request is usually simple: For a particular network segment with a certain length, fibre conditions, distance between huts, capacity requirements, etc., what’s the best a vendor can offer? From personal experience, I’ve found that vendors with a similar portfolio and level of technological expertise often end up with very different answers, leading to outright rejection of some vendors at the first hurdle.

Why does this happen? Two words: conservative design. Indeed, the industry norm for network planning is to devise engineering rules from a limited set of configurations, and come up with several scaling factors to attain a unifying model for network representation. On top of this model, several margins are added, including lifetime deterioration, sudden changes in network behaviour, etc., all of which come with their own assumptions and limitations. Magically, this soup does usually turn out okay for a fail-safe conservative network design. However, while quite good for traditional networking, and perhaps for some modern market segments, this approach does not work for the cloud. Cloud networks, which are under constant cost and service delivery pressures, aim to literally live on the edge. That’s why they require so much more.
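To make the cost of stacked margins concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the function name `design_reach_km`, the margin names and their values are invented for illustration and are not taken from any vendor's engineering rules. It also assumes, as a simplification, that each margin removes a fixed fraction of whatever reach remains.

```python
def design_reach_km(nominal_reach_km, margins):
    """Apply each fractional margin in turn to a nominal reach estimate.

    `margins` maps a margin name to the fraction of reach it gives up
    (hypothetical convention, e.g. 0.10 means "hold back 10%").
    """
    reach = nominal_reach_km
    for name, fraction in margins.items():
        if not 0.0 <= fraction < 1.0:
            raise ValueError(f"margin {name!r} must be in [0, 1)")
        reach *= 1.0 - fraction
    return reach


# Hypothetical margins, invented purely for illustration.
margins = {
    "lifetime_deterioration": 0.10,
    "sudden_behaviour_changes": 0.10,
    "scaling_model_uncertainty": 0.05,
}

nominal = 1000.0  # hypothetical nominal reach in km
conservative = design_reach_km(nominal, margins)
print(f"nominal: {nominal:.0f} km, after margins: {conservative:.1f} km")
```

With these made-up numbers, three individually modest margins compound to roughly 23% of the nominal reach being held back on day one, which is the kind of gap that can separate one vendor's answer from another's.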

Machine learning perspective

So, how could machine learning have helped? At the risk of oversimplification, machine learning models could be developed to capture and generalize known and unknown nonlinear relationships amongst several systems and subsystems. These are not traditional analytical or semi-analytical models that physically describe system behaviour, but rather a computational approach to complex system representation. For instance, a modulation scheme, together with a fibre type, an error-correcting code class, and several other features, could be used to predict maximum system reach. There are two key benefits of such an approach:

- Scalability: cost and time savings on test and verification efforts

- Efficiency: self-optimization based on current network status
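The reach-prediction idea above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the article does not name a model, so a k-nearest-neighbour regressor is swapped in here as the simplest possible stand-in, and every training record (feature labels and reach values alike) is an invented placeholder rather than a measurement. The names `TRAINING`, `hamming` and `predict_reach_km` are hypothetical.

```python
# Hypothetical training records: (modulation, fibre type, FEC class) -> reach in km.
# All values are invented placeholders, NOT measured data.
TRAINING = [
    (("QPSK", "fibre_A", "fec_1"), 3000.0),
    (("QPSK", "fibre_A", "fec_2"), 2400.0),
    (("QPSK", "fibre_B", "fec_1"), 2600.0),
    (("16QAM", "fibre_A", "fec_1"), 900.0),
    (("16QAM", "fibre_A", "fec_2"), 700.0),
    (("16QAM", "fibre_B", "fec_1"), 750.0),
]


def hamming(a, b):
    """Count the positions at which two categorical feature tuples differ."""
    return sum(x != y for x, y in zip(a, b))


def predict_reach_km(features, training=TRAINING, k=2):
    """Average the reach of the k training records closest to `features`."""
    ranked = sorted(training, key=lambda record: hamming(record[0], features))
    nearest = ranked[:k]
    return sum(reach for _, reach in nearest) / len(nearest)


# Query a configuration that does not appear in the training set.
estimate = predict_reach_km(("16QAM", "fibre_B", "fec_2"))
print(f"estimated reach: {estimate:.0f} km")
```

The point of the sketch is the workflow, not the model: a configuration that was never tested still gets an estimate from the configurations that were, which is where the savings in test and verification effort would come from. A production system would need a far richer feature set, real measurements, and a model validated against held-out data.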

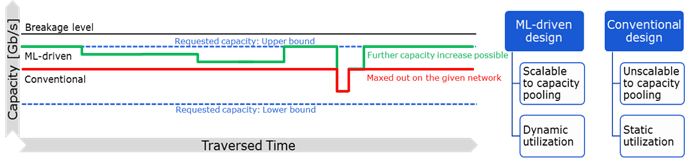

Advanced machine learning frameworks would enable auto-discovery of optimum operational states, getting rid of pessimistic static design conditions on day one (see Figure 2). Furthermore, available capacity may be pooled to serve on-demand requirements, with an associated lower and upper bound based on the service level agreement. These models can be trained with data from diverse networks, allowing for global optimization possibilities. In addition, machine learning models can be regularly updated in offline or online workflows, fine-tuning their operating points. This is a prime example of the role of machine learning in upcoming cloud network optimizations. With the right algorithms, the system can be gradually taught to recognize internal or external performance-related factors, optimize the use of resources, and improve the efficiency of the entire operational process. These developments allow for optimum network resource utilization at any given point, reduced operational costs, smarter planning conditions and optimal traffic management.

Figure 2. Machine learning vs. traditional frameworks
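The idea of pooling capacity within service-level bounds can be sketched as follows. This is a minimal illustration, not a description of any real controller: the function `allocate_pool`, the field names (`demand`, `sla_min`, `sla_max`), the greedy first-come ordering and all figures are assumptions made for the example. It assumes each service has `sla_min <= sla_max` and that demand would, in practice, come from a model's forecast rather than a hard-coded number.

```python
def allocate_pool(pool_gbps, services):
    """Split a shared capacity pool across services within their SLA bounds.

    Each service is a dict with hypothetical keys:
    name, demand, sla_min, sla_max (all in Gb/s).
    Returns (allocation per service, unallocated spare capacity).
    """
    guaranteed = sum(s["sla_min"] for s in services)
    if guaranteed > pool_gbps:
        raise ValueError("pool cannot cover the SLA lower bounds")

    # Step 1: every service receives its SLA lower bound.
    allocation = {s["name"]: s["sla_min"] for s in services}
    spare = pool_gbps - guaranteed

    # Step 2: hand out spare capacity in list order (an assumed policy),
    # never exceeding current demand or the SLA upper bound.
    for s in services:
        target = min(max(s["demand"], s["sla_min"]), s["sla_max"])
        extra = min(spare, target - s["sla_min"])
        allocation[s["name"]] += extra
        spare -= extra
    return allocation, spare


# Hypothetical services and demands, invented for illustration.
services = [
    {"name": "A", "demand": 60, "sla_min": 20, "sla_max": 50},
    {"name": "B", "demand": 30, "sla_min": 10, "sla_max": 40},
    {"name": "C", "demand": 5, "sla_min": 10, "sla_max": 20},
]
allocation, spare = allocate_pool(100, services)
print(allocation, "spare:", spare)
```

In this toy run, service A is capped at its upper bound despite higher demand, service C is held at its lower bound despite lower demand, and the leftover capacity stays in the pool for the next request. A static design would instead have to provision every service for its worst case up front.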

In conclusion, while this might sound promising, promises can be fragile. Indisputably, there are several major challenges. While the science of advancing machine learning algorithms is hard, even utilizing existing algorithms and frameworks for a new application can be troublesome. The difficulty is that it requires awareness of analytic framework trade-offs (e.g. algorithmic complexity and data uncertainty), of domain-based integration and data management systems, and of software design and architecture decisions. Moreover, monitoring thousands of entities, traffic behaviours and services across the networking stack is hardly trivial either. Finally, the financial justification must be driven by specific use cases; otherwise, these solutions can only dig you deeper into the hole. All in all, solutions driven by machine learning need a comprehensive strategy that runs from inception through deployment and maintenance. Only then can the full benefits of the machine revolution be reaped.